BABBLE_ Autonomous Vehicle System

BABBLE is an autonomous vehicle system within which the individual vehicles access their environment through, and communicate with, different senses. The title of the work is a reference to the biblical Tower of Babel, and the work playfully speculates on a scenario in which cybernetic agents, rather than communicating with machine-like efficiency, are trapped within different sensory and linguistic environments, doomed to misunderstand each other.

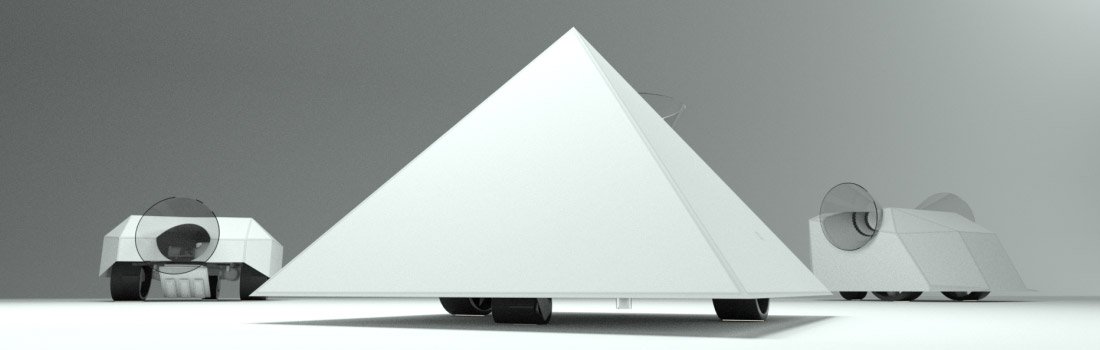

The work is formed of three separate robotic vehicles. Each vehicle has a method of “seeing”, a sensory mode that gives it information through which to plan its next move. The sensory modes are sympathetic, linked like a chain – the first robot leaves behind it marks on the ground; the next robot may see the marks on the ground and emits generative audio; the third robot listens for sound and attempts to head towards it. Through the variances in their sensory input, the connections between them may falter or fail entirely. While the system produces emergent moments of cohesion, these are transient with the system rapidly returning to a chaotic state.

Concept & Context

In creating this work I’ve been continuing my interest in non-human systems and emergent behaviour. With last term’s project I gained an appreciation of how much variance and noise may enter a single electronic sensing system; in that instance it was capacitive touch and environmental sensors being transformed through sonification.

To explore these themes further my goal was to create a node-based system, with each node contributing to the overall tableau. The topic of automation, and in particular autonomous vehicles, seemed like an appropriate lens through which to observe these ideas.

Automation is at the heart of our zeitgeist, with the looming arrival of autonomous vehicles being one of the key tenets of this technological shift. The discourse around this topic is heavy with a sense of inevitability – companies developing AI algorithms are highly valued (1), and futurists speculate on entire sectors of the economy being phased out due to the “rise of the robots.” (2)

I want to push back against this idea that these things are inevitable, or at least imminent, and have been drawing inspiration from both computational arts and the wider art world to inform my creative process.

“Autonomous Trap 001” (2017) by James Bridle is a conceptual piece (the work is described by the artist as a “Salt Ritual”) that plays with the idea that an AI’s view of the world may be hacked by feeding it nonsensical input data. Specifically, the artist surrounds the car with the line markings that symbolise “no entry”, giving the AI no way of escape and “trapping” it. The use of salt harkens back to ancient summoning rituals and religious themes.

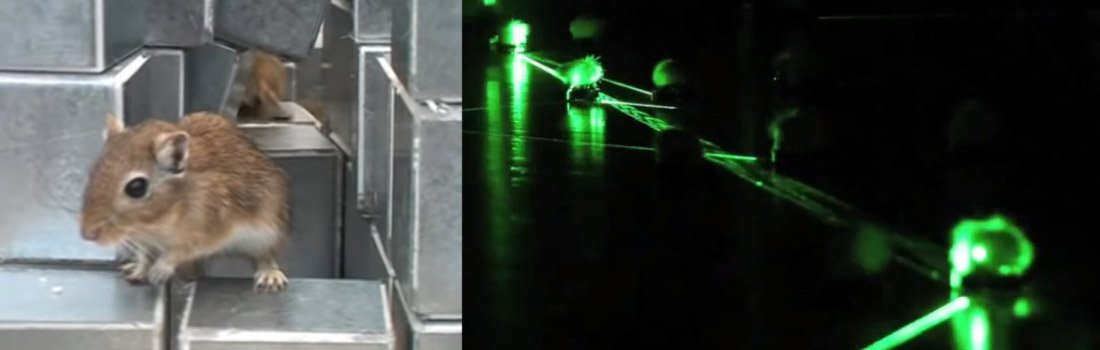

“Seek” (1969-70) by Nicholas Negroponte explores interaction between living and artificial agents, specifically a group of gerbils and a computer-controlled arm that continuously attempted to organise the environment the gerbils were living in. The emergent chaos that ensued is legendary: eventually the gerbils realised they could use their own excrement to gum up the workings of the arm to “defeat” its constant re-organisation of their landscape. The idea that a computational work might create a chaotic situation, beyond its programming, with an unforeseen outcome is exciting and prescient.

So Kanno’s work “Lasermice” (2019) is a swarm robotic installation that also explores the chaos and order found in nature through the use of 60 autonomous “mice”. Each mouse is a tiny vehicle that is not under the control of a central organising server. Rather, each mouse follows simple programmatic rules and sweeps a laser light back and forth. When the light hits a photo-resistor on another mouse, the two synchronise their audio beats, and the process begins again. The end result, when multiplied across all 60 agents, is the slow emergence of cohesion in a similar fashion to a flock of birds or a school of fish.

Other influences contributing to this work include the “action art” of Jackson Pollock and Willem de Kooning, and the novel Snow Crash by Neal Stephenson. In that novel, Stephenson explores the idea that a hacked version of language may affect the physiology (and behaviour) of the recipient.

Design Process

Once my conceptual direction was established I began the explorative design process. Due to the lockdown some technical choices were made purely as a result of what components I had access to, and what I could order within the timeframe required. Some of my plans changed as I found that certain sensor / output pairings wouldn’t work together.

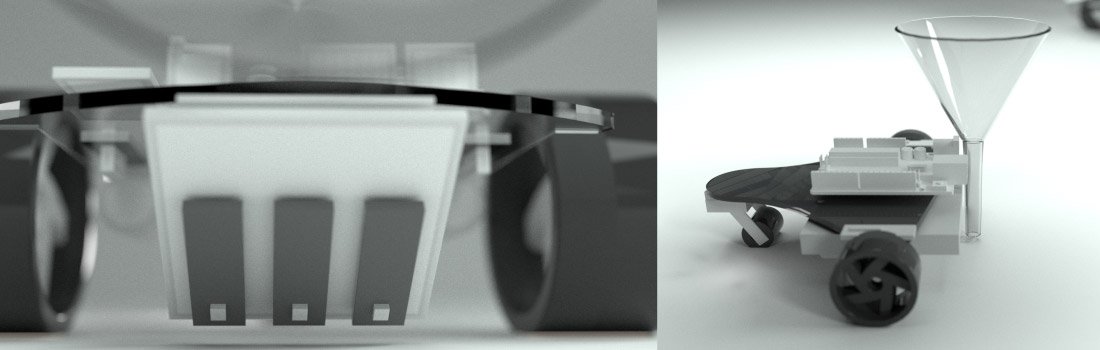

I sourced basic car components from the web; these come with pre-laser-cut bases and have documented build instructions. In particular I followed the instructions and code from DroneBot Workshop – these tutorials showed how to drive the motors and use interrupt sensors to determine RPM and speed. This base was then given custom sensors and code to create each node in the system.
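As a rough illustration of the arithmetic behind interrupt-based speed sensing, the sketch below converts a pulse count into RPM and linear speed. It is written in Python rather than as Arduino code, and the 20-slot encoder disc, the 6.6 cm wheel diameter and the function names are assumptions chosen for illustration, not measurements from these cars.

```python
import math


def rpm_from_pulses(pulse_count: int, interval_s: float, slots_per_rev: int = 20) -> float:
    """Convert encoder pulses counted over interval_s seconds into RPM.

    slots_per_rev is an assumed value for the encoder disc.
    """
    revolutions = pulse_count / slots_per_rev
    return revolutions * 60.0 / interval_s


def speed_cm_per_s(rpm: float, wheel_diameter_cm: float = 6.6) -> float:
    """Convert wheel RPM into linear speed (wheel diameter is an assumption)."""
    circumference_cm = math.pi * wheel_diameter_cm
    return rpm * circumference_cm / 60.0


if __name__ == "__main__":
    rpm = rpm_from_pulses(pulse_count=40, interval_s=1.0)
    print(f"{rpm:.1f} RPM, {speed_cm_per_s(rpm):.1f} cm/s")
```

On the microcontroller, the pulse count would be incremented inside an interrupt handler and read out once per interval.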

In the early stages of development I was aiming for a heavier use of religious metaphor and ritualistic modes of communication. The first robot was the “prophet” – receiving signals from the world around it, it would plot the path for the “followers”. In practical terms, it would divine a pattern based on environmental sensing and then draw a path on the ground using salt released through a funnel.

Spreading large amounts of salt through my tiny kitchen just wasn’t practical, so I made the decision to switch to erasable markers combined with a plastic sheet on the floor. The IR line detection sensors (KY-033) were fairly reliable on bold dark lines on the floor. More on this later.

The first car’s path was initially based on a number of different types of environmental sensing. The temperature, light level and humidity would all drive a simple algorithm to generate mandala-like patterns on the floor.

During testing this idea was simplified significantly. The main reason was that the Arduino Uno board had a limited number of digital inputs. The L298 motor driver requires 6 inputs from the Uno, excluding ground, and initially I was using 2 extra ports for the speed sensors. I split the power sources for each car, using a 9V battery to power the Arduino and six AAA batteries to power the motors through the motor driver.

Another design change was to add a HC-SR04 sensor to detect collisions. The driving algorithm I had written was not sophisticated enough to take into account the trapezoidal dimensions of my tiny kitchen and I wanted to make sure the car could navigate the room semi-intelligently.
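For context, an HC-SR04 reports distance as the duration of an echo pulse, which is converted using the speed of sound. The Python sketch below shows that conversion and a simple avoid decision; the 20 cm threshold and the function names are hypothetical, and this is not the code that ran on the car.

```python
# Speed of sound is roughly 343 m/s at about 20 °C, i.e. 0.0343 cm per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert an echo pulse width in microseconds to a one-way distance in cm.

    The pulse covers the trip to the obstacle and back, hence the division by 2.
    """
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2.0


def should_turn(echo_us: float, threshold_cm: float = 20.0) -> bool:
    """Return True when an obstacle is closer than the (assumed) threshold."""
    return echo_to_distance_cm(echo_us) < threshold_cm


if __name__ == "__main__":
    for echo in (500, 1000, 2000):
        print(echo, round(echo_to_distance_cm(echo), 2), should_turn(echo))
```

A sensor that has been knocked out of alignment can keep reporting a short distance, in which case a check like `should_turn` would fire on every reading.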

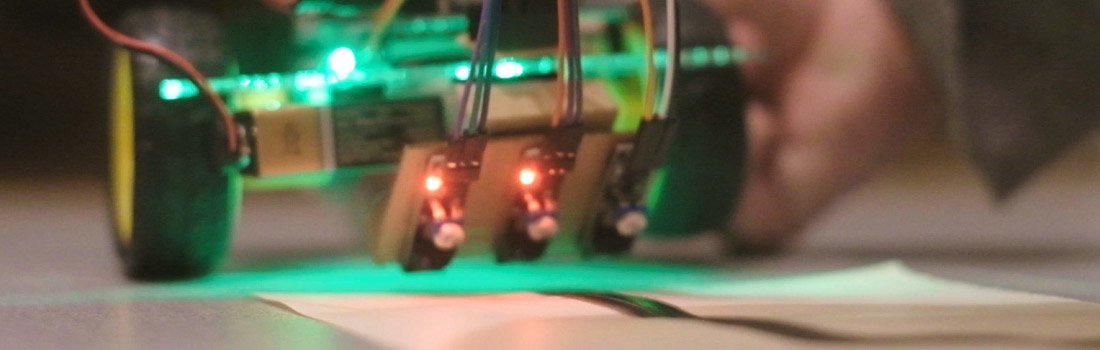

Design for the second car was based around the idea of the “disciple”. This car would follow the line set by the first car and communicate the direction of travel in a more diffuse fashion, in this case literally by using a mist diffuser. I had a KS-0203 steam detection sensor and love the mist works by Fujiko Nakaya, so my goal was to incorporate this into the work. Through testing it became apparent that the sensor was nowhere near sensitive enough to detect the vapour, and as a result I decided to generate audio through an active buzzer and to detect it using microphones on the third car.

The second car now emits a random tone sweep at various intervals, as though it is “speaking in tongues”. The sound is also just loud enough to register over the various motor noises so when the cars are reasonably close together they interact.
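One way to picture a “random tone sweep at various intervals” is as a list of frequency steps between two randomly chosen pitches, followed by a random pause. The Python sketch below is a hypothetical illustration only: the frequency range, step count, pause range and function names are all assumptions, not values taken from the car.

```python
import random


def random_sweep(rng: random.Random, steps: int = 8,
                 low_hz: int = 200, high_hz: int = 2000) -> list[int]:
    """Return `steps` frequencies gliding linearly between two random pitches."""
    start = rng.randint(low_hz, high_hz)
    end = rng.randint(low_hz, high_hz)
    if steps == 1:
        return [start]
    return [round(start + (end - start) * i / (steps - 1)) for i in range(steps)]


def next_pause_s(rng: random.Random, shortest: float = 1.0, longest: float = 5.0) -> float:
    """Pick how long to stay silent before the next sweep (assumed range)."""
    return rng.uniform(shortest, longest)


if __name__ == "__main__":
    rng = random.Random(1)  # seeded so the illustration is repeatable
    print(random_sweep(rng), round(next_pause_s(rng), 2))
```

On the vehicle, each frequency in the list would be played for a few milliseconds before moving to the next.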

The third car (the “follower”) uses microphones combined with conical “ears” to detect the direction of the loudest sound around it. Once it has rotated to be approximately facing the sound source, it takes tentative movements in that direction.
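The rotate-then-step behaviour can be sketched as a small decision function. This Python version assumes one left and one right microphone that each report a simple triggered / not triggered state; the action names are hypothetical and the real logic on the car may differ.

```python
def choose_action(left_triggered: bool, right_triggered: bool) -> str:
    """Pick the follower's next move from two microphone trigger states."""
    if left_triggered and right_triggered:
        # Both ears hear the sound: roughly facing the source, so creep forward.
        return "step_forward"
    if left_triggered:
        return "rotate_left"
    if right_triggered:
        return "rotate_right"
    # Nothing heard: stay put and keep listening.
    return "wait"


if __name__ == "__main__":
    for left, right in [(False, False), (True, False), (False, True), (True, True)]:
        print(left, right, choose_action(left, right))
```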

I went through a couple of versions of design for the “ears”. This was influenced by the objects I had at home, but it turns out that the top of a water bottle has excellent acoustics, balancing amplitude and directionality.

On the second car I added an IR controller to give me more debugging control while it was driving around. The IR sensors detect dark lines on light background and vice versa, so depending on the lighting conditions I had to be able to calibrate what “no input” was on the fly. Controls were added to adjust the speed and whether the car was just in a basic “go forward” mode vs using its sensory inputs to pick which direction to go.
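The on-the-fly calibration can be illustrated as storing a “no input” baseline and treating any large deviation from it, in either direction, as a line. The Python sketch below assumes analogue readings, two sensors straddling the line, and a hypothetical margin of 100 units; none of these details are confirmed by the build.

```python
class LineSensor:
    """Tracks a 'no input' baseline and reports deviations from it."""

    def __init__(self, margin: int = 100):
        self.margin = margin      # assumed deviation needed to count as a line
        self.baseline = None      # set by calibrate(), e.g. from a remote button

    def calibrate(self, reading: int) -> None:
        """Store the current reading as the 'no line' level."""
        self.baseline = reading

    def sees_line(self, reading: int) -> bool:
        """True when the reading differs enough from the baseline.

        Using the absolute difference covers dark-on-light and light-on-dark.
        """
        if self.baseline is None:
            raise ValueError("calibrate() must be called first")
        return abs(reading - self.baseline) > self.margin


def steer(left_on_line: bool, right_on_line: bool) -> str:
    """Steer towards whichever side sensor has drifted onto the line."""
    if left_on_line and not right_on_line:
        return "turn_left"
    if right_on_line and not left_on_line:
        return "turn_right"
    return "forward"


if __name__ == "__main__":
    left, right = LineSensor(), LineSensor()
    left.calibrate(300)
    right.calibrate(320)
    print(steer(left.sees_line(700), right.sees_line(330)))
```

Because each sensor keeps its own baseline, a change in room lighting only requires a fresh call to `calibrate`.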

The second and third cars required soldering small power boards, using strip board to fit in 10 female headers. I didn’t use the speed sensors in the end, as the distances being travelled were short and the cars moved so erratically it didn’t matter if they couldn’t keep a perfect straight line over a few metres.

Testing and Experimentation

The first round of testing was completed with each car individually. It became clear that the first car needed to turn when it was about to smash into a wall, the second car needed to move as slowly as possible to not completely skip over the lines on the ground, and the third car could really have done with front and back microphones as well.

I tweaked the code for the second and third cars, removing the speed sensors to simplify the driving control and to free up inputs for an active buzzer on the second car and a third microphone on the final car, to give it more sound input to process. The quality of my microphone sensors proved problematic. I had two KY-038 sensors which have adjustable sensitivity; however, they still had different sensitivity levels after calibration. To counteract this I spent time coding their trigger states based on moving averages and “zeroed averages”. This method of analysing the sound removed the need to directly compare sensors. The end result worked, and it imbued the car with a tentative, nervous type of motion.
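One possible reading of this approach is sketched below in Python. It assumes that “zeroed” means subtracting each microphone's own slowly updated baseline from a short moving average, so that every sensor is only ever compared with itself. The window size, smoothing factor, threshold and class name are hypothetical, and the original code may well have worked differently.

```python
from collections import deque


class MicTrigger:
    """Fires when a mic's recent average rises above its own baseline."""

    def __init__(self, window: int = 5, baseline_alpha: float = 0.01,
                 threshold: float = 20.0):
        self.recent = deque(maxlen=window)  # short moving-average window
        self.baseline = None                # slow estimate of this mic's quiet level
        self.alpha = baseline_alpha
        self.threshold = threshold

    def update(self, sample: float) -> bool:
        """Feed one reading; return True if the mic counts as triggered."""
        self.recent.append(sample)
        if self.baseline is None:
            self.baseline = float(sample)
        else:
            # Exponential moving average: drifts slowly towards new readings.
            self.baseline += self.alpha * (sample - self.baseline)
        short_avg = sum(self.recent) / len(self.recent)
        # "Zeroing": judge the mic relative to its own baseline only.
        return (short_avg - self.baseline) > self.threshold


if __name__ == "__main__":
    mic = MicTrigger()
    readings = [100] * 50 + [200] * 3
    print([mic.update(r) for r in readings][-3:])
```

Two microphones with different resting levels, say 100 and 300, produce the same trigger pattern for the same relative rise, so their raw values never have to be compared.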

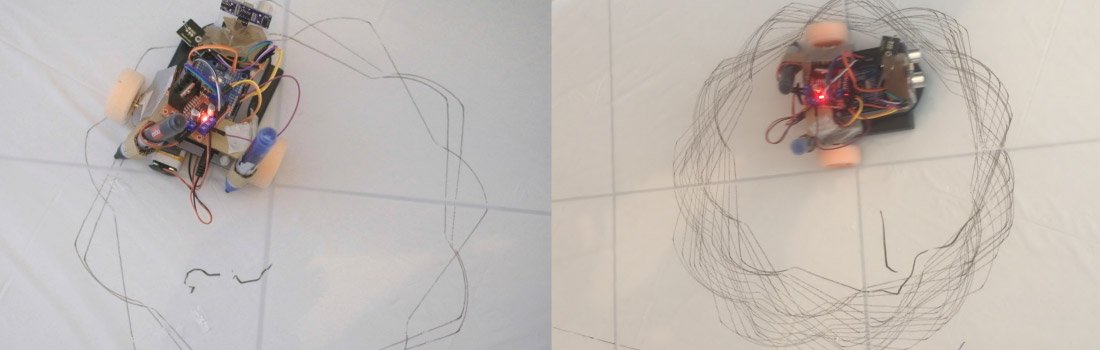

The final test was with the full automated system working together. For this test I covered the ground in a white plastic sheet with the aim of giving the line detection sensors as much contrast as possible to work with.

The first “drawing” car worked mostly successfully, creating a path around the space. The second car encountered issues which had to do with the thickness and visibility of the line it was trying to follow. The marker was not leaving a heavy enough trail and the line detection sensors couldn’t easily discern where to go next. I had to coax the sensors with manual input before it could lock back onto the line.

The next issue with the vehicle was the lack of collision detection. Because the line markings themselves were not heavy enough to maintain a lock onto, the car would careen into the walls and require me to reposition it. It also collided with the line drawing robot, despite the line drawing robot’s best attempts to avoid the collision.

The third robot was listening out for where to go next, and seemed paralysed with fear. The audio inputs led it to often face the correct direction but were not loud enough to make it drive further towards the source.

At this point the drawing car seemed to malfunction (or, hear the voice of a higher power?) and started to draw a mandala. This emergent behaviour took us by surprise – my partner and I shared a “wtf glance” as the pattern was revealed. My best guess for why this happened is that the distance sensor had been bumped in a previous collision and was constantly triggering the avoid behaviour. It was a completely unpredicted moment and fantastic. The line detection car was careening back and forward trying to find something to follow, and the audio reactive vehicle was twitching left and right, paralysed by indecision, and between them both this single robot serenely built a beautiful image.

Following a further collision one of the textas was bumped to a 90-degree angle and suddenly it looked like the “prophet” drawing vehicle was participating in a knife fight. It had lost a wheel somehow and was at this point just driving in a hard circle, “knife” out. This was where we stopped the experiment.

Outcome

There are a number of aspects to this I would refine in a future iteration.

The most important area to refine would be the calibration and testing of each sensor pairing. The lines need to be created in a more “readable” manner, and the sound needs to be easier to read via the microphones. The chaos in the system is still desirable but each robot should be able to clearly sense and respond within its own “language.”

It would be interesting to make more copies of each robot in a larger system. The markings left on the floor would be interesting artefacts in themselves. Also, I would update the system so that the third robot and first robot are linked somehow, making it a form of “closed loop”.

Finally, I would build cases for the robots, to visually simplify the scene and make them more robust.

This work was about automation, control and chaos, and I feel it successfully explored those themes. The moment where the drawing robot started to draw meaningful patterns completely surprised me. The system was designed to explore miscommunication and broken input data, and yet despite all that it was still able to generate a sense of wonder and surprise within the emergent behaviours.

References

- Abuelsamid, S. (n.d.). Waymo’s $30 Billion Valuation Shows The New Reality Of Automated Driving Is Sinking In. [online] Forbes. Available at: https://www.forbes.com/sites/samabuelsamid/2020/03/06/waymos-30b-valuation-shows-the-new-reality-of-automated-driving-is-sinking-in/.

- Blake, M. (2018). The Robots Are Coming, and They Want Your Job. [online] Vice. Available at: https://www.vice.com/en_uk/article/kz5a73/the-robots-are-coming-and-they-want-your-job.

- jamesbridle.com. (n.d.). James Bridle / Autonomous Trap 001. [online] Available at: http://jamesbridle.com/works/autonomous-trap-001.

- cyberneticzoo.com. (2010). 1969-70 – SEEK – Nicholas Negroponte (American). [online] Available at: http://cyberneticzoo.com/robots-in-art/1969-70-seek-nicholas-negroponte-american/.

- Kanno, S. (n.d.). Lasermice. [online] kanno.so. Available at: http://kanno.so/lasermice/.

- Metmuseum.org. (2019). Jackson Pollock – Autumn Rhythm (Number 30). [online] Available at: https://www.metmuseum.org/art/collection/search/488978.

- Wikipedia. (2020). Snow Crash (Neal Stephenson). [online] Available at: https://en.wikipedia.org/wiki/Snow_Crash.

- Workshop, D. (2017). Build a Robot Car with Speed Sensors using Arduino Interrupts. [online] DroneBot Workshop. Available at: https://dronebotworkshop.com/robot-car-with-speed-sensors/ [Accessed 8 May 2020].